The system for generating random people's faces is based on the StyleGAN algorithm from NVIDIA, as well as software from NVIDIA CUDA. This is a pre-trained model with an excellent interpolation and unraveling system, capable of finding hidden factors of variation (for example, determining the position of a face, identity, and even more than 100 parameters).

How does the algorithm work?

StyleGAN's method of operation can really be called quite perfect. It opens up new possibilities for controlling the image generation process.

In fact, the algorithm starts with the studied constant input, and then adjusts the image style, passing each convolutional layer one after the other. This is how the generator directly controls the features of the image at any scale.

The main aim is to find all the hidden factors of variation and qualitatively increase the level of control.

Architecture

StyleGAN defines the high-level attributes of the image:

- The position of the face.

- The personality of a person.

- Gender.

- Hairstyle.

- Freckles and other details.

All this using a nonlinear transformation through a modified hidden vector, which is further adapted to different styles and variations using affine transformations.

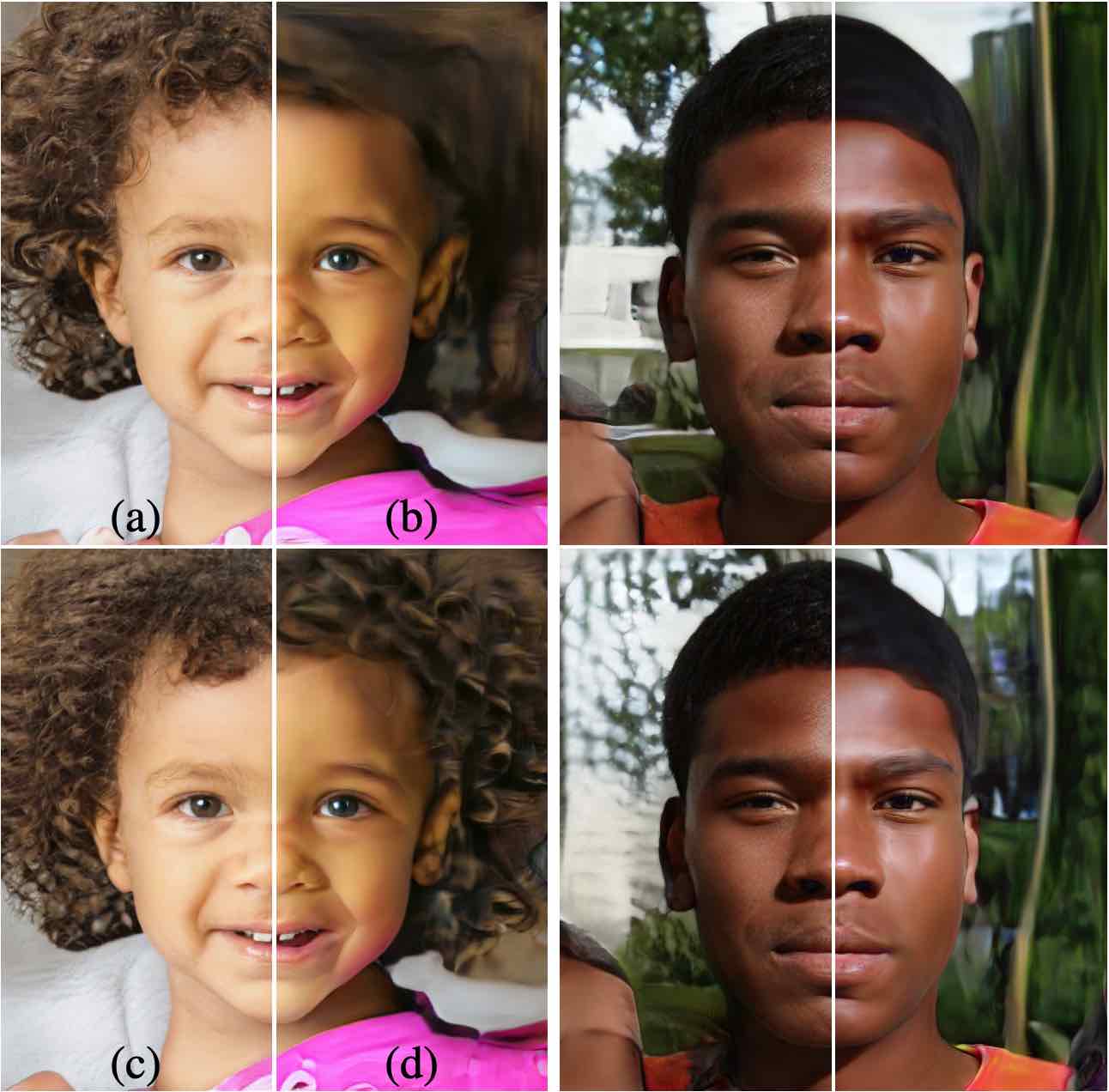

As for noise, it's just a single-channel image consisting of uncorrelated Gaussian noise. It is supplied to each iteration and adjusted based on the studied features. Consider:

A: Noise on all layers.

B: No noise.

C: Noise on thin layers.

D: Noise on thick layers.

Results and image quality

At the moment, the algorithm is trained to produce images of very good quality. We can say that a compromise between quality and interpolation capabilities has been found.

StyleGAN code

The source code is stored here: https://github.com/NVlabs/stylegan.

New dataset: https://github.com/NVlabs/ffhq-dataset.

All the data have been in the public domain since 2019.

The second version of StyleGAN2 was introduced in 2020.